Bringing Cloud Computing Benefits to Debt Collection

Axelerant helped this digital consumer credit lending company migrate its on-premises application and data to Amazon Web Services. The migration gave the company greater flexibility and agility, along with faster development and deployment.

About the customer

The customer has a decade of experience providing debt management solutions to lenders, financial institutions, and debt collectors. Their solutions, which combine industry knowledge with artificial intelligence, enable lenders to better engage with customers and improve their collections and recovery.

Business Challenge

The client approached Axelerant when keeping up with the application's rapid growth became difficult on their existing on-premises infrastructure. Their major challenges were scalability and the ability to run and monitor systems in a way that delivers business value and continually improves supporting processes and procedures.

Other challenges they wanted to solve included:

- Ability of the system to recover from infrastructure or service disruptions

- Ability to dynamically acquire computing resources to meet demand

- Ability to mitigate disruptions such as misconfigurations and transient network issues

The client wanted to leverage the benefits of cloud computing to overcome these challenges, and they wanted to go with Amazon Web Services’ (AWS) cloud platform because of its reliability, breadth of services, and ease of adoption.

Their business needs were:

01. Design a highly available, scalable architecture to deploy on AWS

02. Automate and streamline development and operations

03. Improve cost efficiency

04. Improve speed and agility

Solution

Infrastructure Assessment

After considering various migration strategies and the client's business goals, the DevOps team at Axelerant recommended re-architecting the application.

We recommended a microservice architecture with levels of automation that would directly address the organization's business needs. We recommended that the customer move their application to Docker-based microservices using Amazon Elastic Container Service (Amazon ECS) as it is a highly secure, reliable and scalable way to run containers.

ECS allows the flexibility to use a mix of Amazon Elastic Compute Cloud (Amazon EC2) and AWS Fargate with spot and on-demand pricing options. We decided to move forward with Fargate as it provides serverless compute for containers. Fargate removes the need to provision and manage servers, and in this case, it best suited the client’s business requirements.

We decided not to put the database inside a container because of the transient nature of containers. Considering aspects such as scalability, durability, automated backups, and ease of migration from the existing database, we chose Amazon Relational Database Service (Amazon RDS).

Breaking up a Monolith

The major step in re-architecting the application was to break the monolith into smaller services, each of which could run as a Docker container. In this microservice architecture, each service handles only a single feature. Different instances can run different combinations of containers with different capabilities, and the load balancer always directs the right traffic to the right port on the right instance.

The DevOps team at Axelerant worked with the customer’s team to understand the various components of the application, created a Dockerfile for each component, and built the Docker images used to deploy the application on ECS.

Results

Running the application as containers on Amazon ECS with Fargate improved scalability tremendously. Fargate allocates compute for the containers and scales it to closely match their resource requirements. Fargate also eliminated the need to provision and manage servers manually, which let developers focus on software rather than infrastructure.

AWS CloudFormation let us provision application resources in a repeatable way, so we could build and rebuild the infrastructure without any manual steps. We created AWS CloudFormation templates that acted as a single source of truth and helped us standardize infrastructure components, enabling configuration compliance and faster troubleshooting.

Using Amazon ECS with Fargate ensured availability zone spread while removing the complexity of managing EC2 infrastructure. ECS tasks in a replica service are balanced across availability zones, ensuring high availability of the application. Launching Amazon RDS in Multi-AZ mode provided enhanced availability. In the case of an infrastructure failure, Amazon RDS performs an automatic failover to the standby instance.
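To make the benefit of availability zone spread concrete, here is a toy model in Python. It is only a round-robin illustration, not the ECS scheduler's actual placement algorithm, and the zone names and task count are hypothetical.

```python
from collections import Counter
from itertools import cycle, islice


def spread_tasks(desired_count, zones):
    """Place tasks round-robin so per-zone counts differ by at most one."""
    return Counter(islice(cycle(zones), desired_count))


def surviving_tasks(placement, failed_zone):
    """Count the tasks still running if one zone becomes unavailable."""
    return sum(count for zone, count in placement.items() if zone != failed_zone)


# Hypothetical zone names and task count, for illustration only.
placement = spread_tasks(6, ["zone-a", "zone-b", "zone-c"])
remaining = surviving_tasks(placement, "zone-a")
print(dict(placement), remaining)
```

With the tasks spread evenly, losing a single zone removes only that zone's share of the running tasks, and the rest keep serving traffic.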

Amazon Elastic Container Registry (ECR)

In order to run the containers in the ECS cluster, they must be stored in a scalable container registry. We selected Amazon Elastic Container Registry (ECR) for this purpose, as it is a fully-managed Docker container registry that makes it easy to store, manage, and deploy Docker container images.

Development Automation

Once the application was dockerized, we started developing CloudFormation templates. An AWS CloudFormation template is simply a JSON- or YAML-formatted text file that describes the AWS resources needed to run an application or service, along with any interconnections between those resources. We created one CloudFormation template that launches an ECS cluster with the Fargate launch type, and another that launches Amazon RDS for the database.
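As a rough idea of what such a template contains, the Python sketch below builds a minimal JSON template for a Fargate-based ECS service. It is not the template used in this project: the function name, CPU and memory sizes, container port, and desired count are all assumptions, and a real template would also need security groups, logging, and load balancer wiring.

```python
import json


def fargate_template(service_name, image_uri, subnets, execution_role_arn):
    """Build a minimal CloudFormation template for one Fargate service."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "Cluster": {"Type": "AWS::ECS::Cluster"},
            "TaskDefinition": {
                "Type": "AWS::ECS::TaskDefinition",
                "Properties": {
                    "Family": service_name,
                    "RequiresCompatibilities": ["FARGATE"],
                    "NetworkMode": "awsvpc",  # required for Fargate tasks
                    "Cpu": "256",      # assumed size
                    "Memory": "512",   # assumed size
                    "ExecutionRoleArn": execution_role_arn,
                    "ContainerDefinitions": [
                        {
                            "Name": service_name,
                            "Image": image_uri,
                            "PortMappings": [{"ContainerPort": 8080}],  # assumed port
                        }
                    ],
                },
            },
            "Service": {
                "Type": "AWS::ECS::Service",
                "Properties": {
                    "Cluster": {"Ref": "Cluster"},
                    "LaunchType": "FARGATE",
                    "DesiredCount": 2,  # assumed count
                    "TaskDefinition": {"Ref": "TaskDefinition"},
                    "NetworkConfiguration": {
                        "AwsvpcConfiguration": {"Subnets": subnets}
                    },
                },
            },
        },
    }


# Hypothetical values, for illustration only.
template = fargate_template(
    "accounts",
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/accounts:latest",
    ["subnet-aaaa1111", "subnet-bbbb2222"],
    "arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
)
print(json.dumps(template, indent=2))
```

Generating the template from code is just one option; the same structure can be written directly as a JSON or YAML file.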

Database Migration

We used AWS Database Migration Service (AWS DMS) to migrate data from the existing on-premises Microsoft SQL Server database to Amazon RDS for SQL Server. By selecting the Multi-AZ option during the migration process, we created a Multi-AZ deployment with a primary instance in one availability zone and a standby instance in another.
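For illustration, a Multi-AZ SQL Server instance can be described as a CloudFormation resource along the following lines. This Python sketch is an assumption-laden example rather than the project's actual template: the engine edition, instance class, storage size, and parameter names are hypothetical.

```python
import json


def sqlserver_multi_az_template(instance_class="db.m5.large", storage_gib=100):
    """Build a minimal template for a Multi-AZ RDS for SQL Server instance."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            "MasterUsername": {"Type": "String"},
            # NoEcho keeps the password out of console and API output.
            "MasterUserPassword": {"Type": "String", "NoEcho": True},
        },
        "Resources": {
            "Database": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "Engine": "sqlserver-se",  # assumed edition
                    "LicenseModel": "license-included",
                    "DBInstanceClass": instance_class,  # assumed size
                    "AllocatedStorage": str(storage_gib),  # assumed size
                    "MultiAZ": True,  # primary plus standby in another zone
                    "MasterUsername": {"Ref": "MasterUsername"},
                    "MasterUserPassword": {"Ref": "MasterUserPassword"},
                },
            }
        },
    }


db_template = sqlserver_multi_az_template()
print(json.dumps(db_template, indent=2))
```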

Deployment Overview

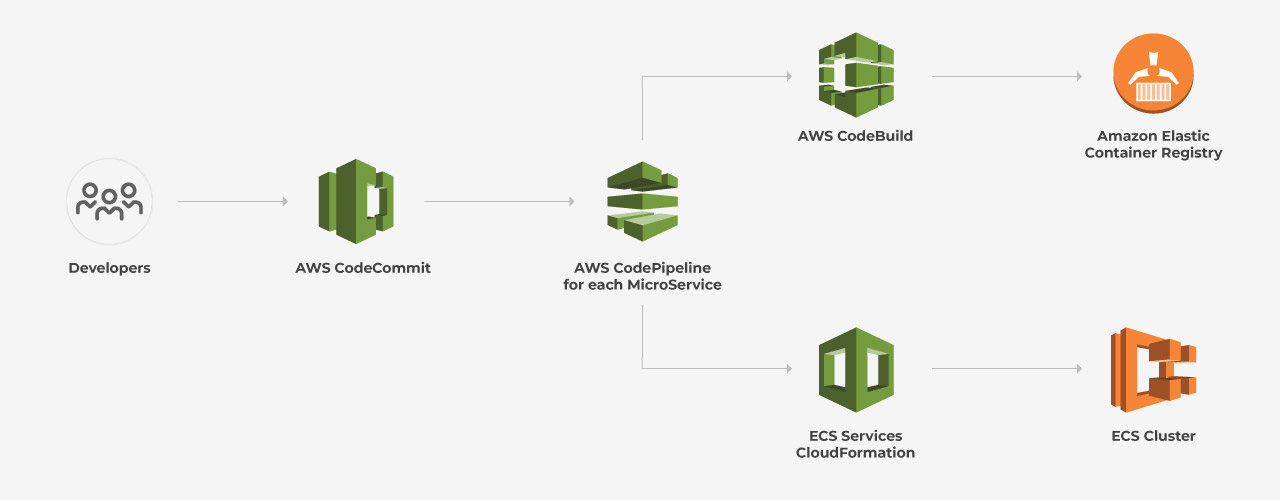

To deploy changes to the existing environment, we used AWS CodeCommit, AWS CodePipeline, and AWS CodeBuild. As a first step in the continuous delivery pipeline, we created a separate AWS CodeCommit repository for each microservice and an AWS CodePipeline for each repository, so each microservice could be built and deployed onto the ECS cluster separately.

The Dockerfiles created earlier were placed in the repository of the corresponding microservice.

For each microservice, we created its own ecs-service-config.json, which AWS CodePipeline uses to deploy the ECS task and service.
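One way CodePipeline can consume such a file is through `Fn::GetParam` in the `ParameterOverrides` setting of a CloudFormation deploy action, which reads a value by key from a flat JSON file in an input artifact. The Python sketch below shows the idea; the keys `PathPattern` and `RulePriority` and the artifact name `SourceOutput` are hypothetical, since the actual contents of the file are not shown here.

```python
import json

# Hypothetical contents of ecs-service-config.json for one microservice.
service_config = {"PathPattern": "/payments/*", "RulePriority": "10"}


def parameter_overrides(artifact_name, file_name, keys):
    """Build the ParameterOverrides JSON string for a deploy action.

    Each template parameter is resolved at deploy time from the named
    JSON file inside the given pipeline artifact.
    """
    overrides = {
        key: {"Fn::GetParam": [artifact_name, file_name, key]} for key in keys
    }
    return json.dumps(overrides)


overrides_json = parameter_overrides(
    "SourceOutput", "ecs-service-config.json", sorted(service_config)
)
print(overrides_json)
```

Keeping these values in a per-repository file means the shared CloudFormation template stays generic while each microservice supplies its own settings.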

Above: CI/CD within AWS using CodePipeline and deploying on ECS Cluster

Every CodePipeline consists of four stages:

Stage 1. Source (AWS CodeCommit)

AWS CodeCommit is a fully managed source control service that hosts secure Git-based repositories. It makes it easy for teams to collaborate on code in a secure and highly scalable ecosystem. We created a separate repository in AWS CodeCommit for each microservice. A Dockerfile and an ecs-service-config.json file are placed alongside the source code in every repository.

AWS CloudFormation templates for creating ECS task definitions are placed in an S3 bucket. AWS CodePipeline uses this S3 bucket as the source for the CloudFormation template that deploys each microservice as an ECS service.

Stage 2. Build (AWS CodeBuild)

AWS CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages that are ready to deploy. In this case, CodeBuild takes the Dockerfile in the source code repository and builds a Docker image from it. Once the Docker image is built, it is pushed to Amazon Elastic Container Registry (ECR).
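The build-and-push step usually comes down to three shell commands: authenticate Docker against the registry, build the image, and push it. The Python sketch below assembles those commands for a given repository. The account ID, region, repository name, and tag are placeholders, and the exact commands in the project's build configuration may differ.

```python
def ecr_registry(account_id, region):
    """Return the registry host name for a private ECR registry."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def build_and_push_commands(account_id, region, repository, tag):
    """Return the shell commands that build an image and push it to ECR."""
    registry = ecr_registry(account_id, region)
    image = f"{registry}/{repository}:{tag}"
    return [
        # Log Docker in to the registry with a short-lived password.
        f"aws ecr get-login-password --region {region} "
        f"| docker login --username AWS --password-stdin {registry}",
        # Build from the Dockerfile at the repository root.
        f"docker build -t {image} .",
        f"docker push {image}",
    ]


# Placeholder values, for illustration only.
for command in build_and_push_commands(
    "123456789012", "us-east-1", "payments", "build-42"
):
    print(command)
```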

To avoid installing the Java SDK and other dependencies on every build, we created a custom build environment with all dependencies pre-installed.

Stage 3. Approval

We configured each AWS CodePipeline with a manual approval step. Once the approval step is completed, CodePipeline deploys the ECS service.

Stage 4. Deploy to ECS Cluster

Once the manual approval is completed, AWS CodePipeline deploys the changes by updating the CloudFormation stack. The pipeline fetches the CloudFormation template from the S3 bucket, pulls the image from the Elastic Container Registry, and updates the ECS stack.
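The four stages map onto CodePipeline action types roughly as sketched below in Python. Only the stage skeleton is shown: the action names are hypothetical, and the per-action configuration (repository, build project, stack name, artifacts, roles) is omitted.

```python
def action_type(category, provider):
    """Return an ActionTypeId for an AWS-owned CodePipeline action."""
    return {
        "Category": category,
        "Owner": "AWS",
        "Provider": provider,
        "Version": "1",
    }


def pipeline_stages():
    """Return the four-stage skeleton: source, build, approval, deploy."""
    layout = [
        ("Source", "Source", "CodeCommit"),
        ("Build", "Build", "CodeBuild"),
        ("Approval", "Approval", "Manual"),
        ("Deploy", "Deploy", "CloudFormation"),
    ]
    return [
        {
            "Name": stage_name,
            "Actions": [
                {
                    "Name": f"{stage_name}Action",  # hypothetical action name
                    "ActionTypeId": action_type(category, provider),
                }
            ],
        }
        for stage_name, category, provider in layout
    ]


for stage in pipeline_stages():
    print(stage["Name"], stage["Actions"][0]["ActionTypeId"]["Provider"])
```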

We used the ecs-service-config.json file to set the path-based routing and routing priority of each microservice, with an Application Load Balancer performing the path-based routing. AWS CodePipeline reads this JSON file through parameter overrides during the deployment stage.
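On the load balancer side, path-based routing is expressed as listener rules, each with a path pattern, a priority, and a target group to forward to. The Python sketch below builds such rules as CloudFormation resources. The service names, paths, priorities, and the `Listener` and `...TargetGroup` logical IDs are hypothetical.

```python
def listener_rule(listener_ref, target_group_ref, path_pattern, priority):
    """Build one path-based Application Load Balancer listener rule."""
    if not 1 <= priority <= 50000:
        raise ValueError("priority must be between 1 and 50000")
    return {
        "Type": "AWS::ElasticLoadBalancingV2::ListenerRule",
        "Properties": {
            "ListenerArn": listener_ref,
            "Priority": priority,  # lower numbers are evaluated first
            "Conditions": [{"Field": "path-pattern", "Values": [path_pattern]}],
            "Actions": [{"Type": "forward", "TargetGroupArn": target_group_ref}],
        },
    }


def build_rules(services):
    """Build one rule per service, rejecting duplicate priorities."""
    priorities = [service["priority"] for service in services]
    if len(set(priorities)) != len(priorities):
        raise ValueError("each rule on a listener needs a unique priority")
    return {
        f"{service['name']}Rule": listener_rule(
            {"Ref": "Listener"},  # assumed logical ID
            {"Ref": f"{service['name']}TargetGroup"},  # assumed logical ID
            service["path"],
            service["priority"],
        )
        for service in services
    }


# Hypothetical services, for illustration only.
rules = build_rules([
    {"name": "Accounts", "path": "/accounts/*", "priority": 10},
    {"name": "Payments", "path": "/payments/*", "priority": 20},
])
print(sorted(rules))
```

Because priorities must be unique per listener, keeping each service's priority in its own configuration file makes clashes easy to spot before deployment.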

Database Backups

We used Amazon RDS automated backups to back up the database instance. We selected a backup window and a seven-day retention period for these backups. Because we configured the database in Multi-AZ mode, backups are taken from the standby instance to reduce the impact on the primary. With automated backups enabled, Amazon RDS also uploads transaction logs to Amazon S3 every five minutes. These archived logs let us restore the database to any point in time within the retention period.
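The practical effect of a seven-day retention period and five-minute log uploads can be sketched as a restorable time window. The Python example below is an approximation for illustration only: the real earliest and latest restorable times are reported by Amazon RDS itself and depend on when backups and log uploads actually completed.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=7)        # backup retention period
LOG_INTERVAL = timedelta(minutes=5)  # transaction log upload interval


def restorable_window(now):
    """Approximate the earliest and latest points a restore can target."""
    return now - RETENTION, now - LOG_INTERVAL


def can_restore_to(target, now):
    """Check whether a target time falls inside the approximate window."""
    earliest, latest = restorable_window(now)
    return earliest <= target <= latest


# Hypothetical timestamps, for illustration only.
now = datetime(2024, 1, 8, 12, 0, tzinfo=timezone.utc)
print(can_restore_to(now - timedelta(days=1), now))
print(can_restore_to(now - timedelta(days=8), now))
```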