Introduction

Containers have changed the way software applications are created, deployed, and managed, and Kubernetes has reshaped the software development lifecycle. This blog post demonstrates how applications that run on virtual machines can be containerized and run as microservices on Kubernetes.

Mautic is a leading open-source marketing automation platform. It’s an awesome application for organizations, small and large, to run marketing campaigns. However, it has faced challenges with scalability during intensive marketing campaigns and with maintenance, such as deploying periodic updates with minimal downtime. This post discusses how containerizing the application addresses these challenges and what it takes to make an application cloud-native.

Out of the box, Mautic is not enterprise-ready and does not follow the twelve-factor app methodology. Its configuration is spread across multiple files instead of being supplied through environment variables. There is an open-source Mautic image, but it is not ready to be deployed on Kubernetes as-is. Ephemeral and persistent components, such as logs and cache, are not decoupled. The existing image also runs multiple processes in the same container, which is an anti-pattern in containerization.

A typical Mautic setup consists of an application tier, where Mautic and Nginx run together on a set of servers behind a load balancer, a MySQL database, and a message queue. A set of cron jobs is configured alongside the application on the app-tier servers.

To make Mautic enterprise-ready, highly available, and scalable, the following approach has been adopted:

- Dockerizing the application in line with the twelve-factor app methodology

- Deploying the application with Helm, a package manager for Kubernetes

- Setting up a CI/CD pipeline using GitLab CI to create an environment per branch (multi-dev builds)

In line with containerization best practices, the web tier and the application tier have been decoupled. Nginx and Mautic run in separate containers and share a common volume that contains the application code.

Mautic’s functionality can be extended with plugins. To make the application extensible with plugins and customizations, the Docker image runs `composer install` every time the codebase changes. This lets developers add plugins and extensions and have Composer include them along with the application.

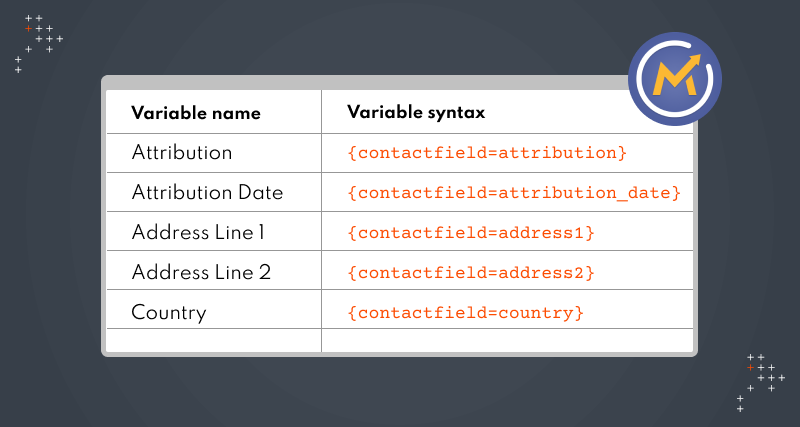

Mautic’s configuration is spread across multiple files; this has been addressed by using `config.php` as the single configuration file and injecting configuration values through the container’s environment variables.
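
The single-file, environment-driven configuration can be illustrated in a language-neutral way. The Python sketch below is not Mautic’s `config.php` (which is PHP); it assumes hypothetical environment variable names such as `MAUTIC_DB_HOST` and shows the twelve-factor pattern of building the whole configuration from the environment and failing fast when a required value is missing.

```python
import os
from typing import Mapping

# Hypothetical environment variable names -> config keys.
# These are assumptions for illustration, not Mautic's actual settings.
REQUIRED = {
    "MAUTIC_DB_HOST": "db_host",
    "MAUTIC_DB_NAME": "db_name",
    "MAUTIC_DB_USER": "db_user",
    "MAUTIC_DB_PASSWORD": "db_password",
}
OPTIONAL = {
    "MAUTIC_DB_PORT": ("db_port", "3306"),
    "MAUTIC_SITE_URL": ("site_url", "http://localhost"),
}


def load_config(env: Mapping[str, str] = os.environ) -> dict:
    """Build the application config from environment variables only."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        # Fail fast at startup instead of running with a half-filled config.
        raise RuntimeError(f"missing required settings: {', '.join(sorted(missing))}")
    config = {key: env[name] for name, key in REQUIRED.items()}
    for name, (key, default) in OPTIONAL.items():
        config[key] = env.get(name, default)
    return config


if __name__ == "__main__":
    example_env = {
        "MAUTIC_DB_HOST": "mysql",
        "MAUTIC_DB_NAME": "mautic",
        "MAUTIC_DB_USER": "mautic",
        "MAUTIC_DB_PASSWORD": "secret",
    }
    print(load_config(example_env))
```

Because the same image reads everything from the environment, one build can be promoted unchanged from a branch environment to production.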

Security is paramount when containerizing applications; with that in mind, the containers run as unprivileged (non-root) users.
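
On Kubernetes, running as non-root is usually enforced declaratively through the pod’s security context (for example `runAsNonRoot`). As a complementary and purely hypothetical safeguard, an entrypoint can refuse to start as root. A minimal Python sketch, assuming a Unix-like host where `os.geteuid()` is available:

```python
import os
from typing import Optional


def ensure_unprivileged(uid: Optional[int] = None) -> int:
    """Return the effective UID, raising PermissionError if it is root (0).

    When uid is None, the current process's effective UID is checked via
    os.geteuid(), which exists only on Unix-like systems.
    """
    effective = os.geteuid() if uid is None else uid
    if effective == 0:
        raise PermissionError("refusing to run as root; use an unprivileged user")
    return effective
```

Calling `ensure_unprivileged()` at the top of an entrypoint makes a misconfigured container fail immediately instead of quietly running with root privileges.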

Containerizing the application is only half the battle. To make it truly enterprise-ready, there must be a way to deploy the application easily with reusable configuration. This can be achieved with Helm, the package manager for Kubernetes. Helm charts make it easy to define all the Kubernetes objects, such as deployments, services, ingresses, secrets, and persistent volumes, in a modular way. A chart provides a single configuration file, `values.yaml`, for all the deployment inputs, such as the number of pod replicas, image repositories, secrets, environment variables, and other settings the deployment requires. By modifying these values, a different team or organization can repurpose the entire deployment without having to touch the Kubernetes manifest files.
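
Helm merges values files in order (for example `helm install -f values.yaml -f team-values.yaml ...`), with later files taking precedence and nested maps merged key by key. The Python sketch below approximates that deep-merge behavior to show how a team can repurpose the chart by overriding only a few values. The keys are hypothetical, not the actual Mautic chart’s schema, and Helm-specific details such as removing a key by setting it to `null` are not modeled.

```python
from copy import deepcopy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new dict where override wins, merging nested dicts recursively."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical chart defaults, standing in for values.yaml.
chart_defaults = {
    "replicaCount": 2,
    "image": {"repository": "example.com/mautic", "tag": "latest"},
    "ingress": {"enabled": True, "host": "mautic.example.com"},
}

# Another team's overrides: only the values that differ.
team_values = {
    "replicaCount": 4,
    "ingress": {"host": "marketing.example.org"},
}

if __name__ == "__main__":
    print(deep_merge(chart_defaults, team_values))
```

Untouched keys such as `image` and `ingress.enabled` keep their defaults, which is what makes a small override file enough to repurpose the deployment.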

Database

The Mautic Helm chart lets you choose between a local MySQL service (running as pods on Kubernetes) and a managed database service such as Amazon RDS or Azure Database for MySQL. By default, the chart creates a local database. However, if you supply the hostname of your external database service as the value of `ExternalDb.host`, the application connects to that host and the chart does not create a local database service. When using an external database, ensure that it is reachable from the Kubernetes cluster and that its credentials are passed as Helm values.
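
The selection rule can be expressed as a small function. This is a minimal Python sketch of the logic described above, not the chart’s template code; it treats the Helm values as a nested dictionary, and the local service name `mautic-mysql` is hypothetical.

```python
from typing import Any, Dict, NamedTuple


class DbTarget(NamedTuple):
    host: str
    create_local_db: bool


# Hypothetical name of the in-cluster MySQL service the chart would create.
LOCAL_DB_SERVICE = "mautic-mysql"


def resolve_db(values: Dict[str, Any]) -> DbTarget:
    """Use ExternalDb.host when it is set; otherwise fall back to a local DB."""
    external = values.get("ExternalDb") or {}
    host = external.get("host")
    if host:
        # External (e.g. managed) database: skip creating the local MySQL service.
        return DbTarget(host, create_local_db=False)
    return DbTarget(LOCAL_DB_SERVICE, create_local_db=True)


if __name__ == "__main__":
    print(resolve_db({}))
    print(resolve_db({"ExternalDb": {"host": "mautic.abc123.us-east-1.rds.amazonaws.com"}}))
```

An empty string is treated the same as an unset value, so leaving `ExternalDb.host` blank keeps the default local database.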

CI/CD Pipeline

When it comes to CI/CD for Kubernetes, GitLab is a natural choice. There are various reasons, the primary one being that GitLab CI is an excellent way to create a declarative pipeline. The pipeline is defined in a YAML file named `.gitlab-ci.yml` and consists of stages, each performing a specific action, such as building a Docker image or deploying the image to the servers. The file is easy to read and clearly defines the stages.

GitLab CI is like a Swiss Army knife, with many options that help make pipelines as extensible and modular as possible, and a Kubernetes cluster can be integrated with GitLab seamlessly.

Let’s look at the CI/CD that has been set up for Mautic.

Continuous Integration (CI)

There are two stages of CI:

- dev-build: Invoked when code is committed to a non-master branch

- prod-build: Invoked when code is merged into the master branch
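
In `.gitlab-ci.yml`, this kind of branching is typically expressed with job `rules` that test `CI_COMMIT_BRANCH`. The Python sketch below models the same decision so the rule is explicit; it assumes the default branch is named `master`, as in this setup. GitLab leaves `CI_COMMIT_BRANCH` unset in merge request and tag pipelines, so the sketch returns `None` there.

```python
from typing import Optional

DEFAULT_BRANCH = "master"  # assumption: the main branch in this setup


def build_job_for(branch: Optional[str]) -> Optional[str]:
    """Return which CI build job should run for a branch pipeline.

    CI_COMMIT_BRANCH is unset in merge request and tag pipelines,
    so None means neither build job applies.
    """
    if not branch:
        return None
    return "prod-build" if branch == DEFAULT_BRANCH else "dev-build"
```

In a job, the input would come from `os.environ.get("CI_COMMIT_BRANCH")`; the same rule decides between the dev and prod deploy stages.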

In the dev-build stage, the Mautic and Mautic Nginx images are built from the branch to which the code has been committed. The images are tagged with `CI_COMMIT_SHORT_SHA`, the first eight characters of the commit SHA. For instance, if the code is checked in to `feature-123` and `CI_COMMIT_SHA` is 7fdadfdafdfdaf, the Docker images are tagged 7fdadfda. Once the images are built and tagged, they’re pushed to AWS ECR.

In the prod-build stage, the Mautic and Mautic Nginx images are built from the master branch. The images are tagged with both `CI_COMMIT_SHORT_SHA` and `latest`. For instance, if `CI_COMMIT_SHA` is 8abfe3ed7c41a9, the Docker images are tagged 8abfe3ed and latest. Once the images are built and tagged, they’re pushed to AWS ECR.
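
The tagging scheme for both build stages can be sketched as follows. GitLab’s `CI_COMMIT_SHORT_SHA` is the first eight characters of `CI_COMMIT_SHA`; the ECR registry URL and repository names below are placeholders, not the project’s actual values.

```python
from typing import List

# Placeholder registry; substitute your own AWS account ID and region.
REGISTRY = "123456789012.dkr.ecr.us-east-1.amazonaws.com"
IMAGES = ["mautic", "mautic-nginx"]  # hypothetical repository names


def short_sha(commit_sha: str) -> str:
    """Mirror GitLab's CI_COMMIT_SHORT_SHA: the first eight characters."""
    return commit_sha[:8]


def image_refs(commit_sha: str, is_master: bool) -> List[str]:
    """Return every image reference to build and push to ECR."""
    tags = [short_sha(commit_sha)]
    if is_master:
        tags.append("latest")  # prod-build also publishes :latest
    return [f"{REGISTRY}/{name}:{tag}" for name in IMAGES for tag in tags]
```

A dev-build therefore produces two references (one per image), while a prod-build produces four, since each image is also tagged `latest`.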

Continuous Delivery (CD)

Just like CI, CD has two deploy stages:

- dev-deploy: Invoked when code is committed to a non-master branch

- prod-deploy: Invoked when code is merged into the master branch

In the dev-deploy stage, the images tagged with `CI_COMMIT_SHORT_SHA` are pulled from ECR and deployed onto Kubernetes. Every time code is checked in to a non-master branch, a new namespace named after the branch (taken from the GitLab variable `CI_COMMIT_BRANCH`) is created in Kubernetes, and the application is deployed into that namespace. This is equivalent to creating a new environment per branch.

The dev-deploy stage replaces two values with environment variables, making the namespace and the domain name dynamic.

The namespace is set from GitLab’s `CI_COMMIT_BRANCH` variable, and the hostname is prefixed with the same value. Thus, for every branch, a corresponding subdomain and ingress resource are created. For instance, if the code is deployed from `feature-123`, a new namespace called `feature-123` is created in the Kubernetes cluster, along with a new ingress with the hostname feature-123.domainname.com. This enables comprehensive testing of the application on every branch.
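
A minimal Python sketch of the per-branch naming, assuming `domainname.com` as the base domain. Kubernetes namespace names must be valid DNS labels (at most 63 lowercase letters, digits, or `-`, starting and ending with an alphanumeric character), so the sketch also normalizes branch names such as `Feature/ABC_1`. That normalization is an added safeguard, similar in spirit to GitLab’s `CI_COMMIT_REF_SLUG`, and not necessarily part of the original pipeline.

```python
import re

BASE_DOMAIN = "domainname.com"  # placeholder base domain from the example


def branch_slug(branch: str) -> str:
    """Turn a branch name into a valid DNS label (namespace and subdomain)."""
    # Lowercase, then collapse every run of invalid characters into one '-'.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower())
    # Enforce the 63-character limit, then trim '-' so it starts/ends alphanumeric.
    slug = slug[:63].strip("-")
    if not slug:
        raise ValueError(f"branch {branch!r} yields an empty namespace name")
    return slug


def dev_environment(branch: str) -> dict:
    """Namespace and ingress hostname for a branch's dev-deploy."""
    slug = branch_slug(branch)
    return {"namespace": slug, "host": f"{slug}.{BASE_DOMAIN}"}
```

For `feature-123` this yields the namespace `feature-123` and the host `feature-123.domainname.com`, matching the example above.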

The prod-deploy stage does the same thing as dev-deploy, except that it pulls the images tagged `latest` and deploys them into the production namespace.

While it’s easy to manage all the variables in the `values.yaml` file, for security reasons GitLab variables are used for sensitive values such as the database password and the mailer password. Helm’s `--set` flag overrides values defined in `values.yaml`, and the deploy stages described above use it to pass this sensitive data from GitLab variables.
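
A sketch of how a deploy job might assemble the Helm invocation, written in Python for consistency with the other examples. It assumes GitLab CI/CD variables named `DB_PASSWORD` and `MAILER_PASSWORD` (GitLab exposes CI/CD variables to jobs as environment variables); the release name, chart path, and value keys are hypothetical. `helm upgrade --install`, `--namespace`, `--create-namespace`, `-f`, and `--set` are standard Helm 3 flags.

```python
import os
from typing import List, Mapping

# Hypothetical mapping: Helm value path -> GitLab CI/CD variable name.
SECRET_VALUES = {
    "mautic.dbPassword": "DB_PASSWORD",
    "mautic.mailerPassword": "MAILER_PASSWORD",
}


def helm_deploy_args(namespace: str, image_tag: str,
                     env: Mapping[str, str] = os.environ) -> List[str]:
    """Build argv for `helm upgrade --install`; each --set overrides values.yaml."""
    args = [
        "helm", "upgrade", "--install", "mautic", "./charts/mautic",
        "--namespace", namespace, "--create-namespace",
        "-f", "values.yaml",
        "--set", f"image.tag={image_tag}",
    ]
    for value_path, var_name in SECRET_VALUES.items():
        if var_name not in env:
            raise KeyError(f"CI/CD variable {var_name} is not set")
        args += ["--set", f"{value_path}={env[var_name]}"]
    return args
```

The resulting list can be passed to `subprocess.run(args, check=True)`. Because `--set` treats commas as separators between assignments, a secret containing a comma would need escaping.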

Once the pipeline is in place, all that remains is to integrate Kubernetes with GitLab and start deploying the application. By updating the number of replicas in `values.yaml`, you can scale the number of pods up or down based on the anticipated load.
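
Choosing a replica count from the anticipated load is simple arithmetic. The Python sketch below assumes you have measured how many requests per second a single pod can sustain (the figures here are hypothetical) and clamps the result to a floor and ceiling, so the deployment stays highly available without over-provisioning.

```python
import math


def replicas_for(expected_rps: float, rps_per_pod: float,
                 min_replicas: int = 2, max_replicas: int = 20) -> int:
    """Pods needed for the expected load, clamped to [min_replicas, max_replicas]."""
    if rps_per_pod <= 0:
        raise ValueError("rps_per_pod must be positive")
    needed = math.ceil(expected_rps / rps_per_pod)
    return max(min_replicas, min(max_replicas, needed))
```

For example, a campaign expected to peak at 450 requests per second, with pods that each handle about 100, gives `replicas_for(450, 100)`, i.e. 5 replicas, which would then be set as the replica count in `values.yaml`.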

Conclusion

This demonstrates that, with the right approach and the right set of tools, legacy applications can be containerized and run on Kubernetes with greater efficiency and availability. Containerization makes your application truly cloud-native, and an efficient CI/CD pipeline that supports multi-dev deployments makes it easy not only to deploy the application but also to test it in multiple environments with varied configurations.
